Unlocking the Power of LlamaIndex: A Step-by-Step Guide to Building AI Workflows in TypeScript

Introduction: What Is LlamaIndex?

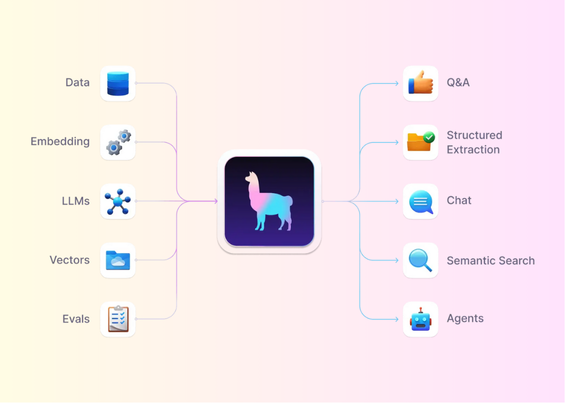

LlamaIndex is a framework that helps developers connect Large Language Models (LLMs) such as GPT-4 to their own data and build customizable AI applications on top of it. It handles loading, parsing, indexing, and querying data, which makes it useful for enterprises that want an LLM to work with private or domain-specific information.

Key Use Case: Multi-agent systems. LlamaIndex allows multiple agents to collaborate on tasks, and its TypeScript library lets you build workflows that automate decision-making and scale with your application. Because it can orchestrate tasks, manage state, and handle complex queries, LlamaIndex suits companies that want to deploy AI systems that mimic human collaboration.

But how exactly does LlamaIndex work, and how can you leverage it for your projects? Let’s dive into building a multi-agent workflow using TypeScript.

Why Use LlamaIndex?

LlamaIndex isn’t just a simple AI tool; it’s a framework for coordinating multiple agents, which makes complex task management more efficient. Whether you’re developing AI-driven customer support or advanced financial systems, multi-agent collaboration makes LlamaIndex a strong choice for developers looking to scale their applications.

- Data Augmentation: LlamaIndex ingests, structures, and queries private or domain-specific data.

- Multi-Agent Collaboration: Multiple agents can work together on a shared task, making it ideal for distributed systems.

- Integrations: Supports Python and TypeScript for diverse environments, from FastAPI to Node.js.

Now, let’s look at how you can build your own multi-agent workflow using LlamaIndex and TypeScript.

Creating Your First Multi-Agent Workflow with TypeScript

Step 1 – Setting Up the Environment

First, ensure you have a development environment ready with Node.js and TypeScript. You’ll also need the LlamaIndex TypeScript library (LlamaIndex.TS), which you can install via npm:

    npm install llamaindex

You’ll also need an OpenAI API key, or you can opt for a local model if you’re working on internal data.

Step 2 – Using create-llama to Generate an App

LlamaIndex offers a command-line tool, create-llama, which simplifies the process of setting up a full-stack app. Here’s how to create a project:

    npx create-llama@latest

This tool will prompt you to choose your preferred backend, such as Next.js, Express, or Python’s FastAPI. For this example, select the Next.js backend, which works directly with LlamaIndex.TS.

Once generated, you’ll find that your app includes pre-configured API integrations, enabling seamless chat with your data.

Building a Multi-Agent Workflow

Step 3 – Defining Agents and Tools

LlamaIndex’s true power lies in multi-agent orchestration. An agent in LlamaIndex is a semi-autonomous AI that handles specific tasks using tools provided by you.

Here’s how you can set up a simple math agent:

    // Note: export names and options vary across LlamaIndex.TS versions.
    import { OpenAIAgent, FunctionTool } from "llamaindex";

    // Each tool pairs a plain function with metadata the LLM uses to choose it.
    const sumTool = FunctionTool.from(
      ({ a, b }: { a: number; b: number }) => `${a + b}`,
      {
        name: "sum",
        description: "Adds two numbers",
        parameters: {
          type: "object",
          properties: {
            a: { type: "number", description: "First number" },
            b: { type: "number", description: "Second number" },
          },
          required: ["a", "b"],
        },
      },
    );

    const multiplyTool = FunctionTool.from(
      ({ a, b }: { a: number; b: number }) => `${a * b}`,
      {
        name: "multiply",
        description: "Multiplies two numbers",
        parameters: {
          type: "object",
          properties: {
            a: { type: "number", description: "Number to multiply" },
            b: { type: "number", description: "Multiplier" },
          },
          required: ["a", "b"],
        },
      },
    );

    // The agent reads the tool metadata and decides which tool to call.
    const agent = new OpenAIAgent({
      tools: [sumTool, multiplyTool],
      verbose: true,
    });

Now your agent can perform tasks like addition and multiplication by choosing the appropriate tool based on the input.
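To make the idea of "choosing the appropriate tool" concrete, here is a minimal sketch of tool dispatch in plain TypeScript. It does not use LlamaIndex at all: `SimpleTool`, `simpleTools`, and `dispatchTool` are hypothetical names invented for illustration, and the tool name is passed in by hand where a real agent would let the LLM pick it.

```typescript
// Hypothetical types for illustration; not part of the LlamaIndex.TS API.
type ToolArgs = { a: number; b: number };

interface SimpleTool {
  name: string;
  description: string;
  call: (args: ToolArgs) => number;
}

// A small registry of tools, each with a name the caller can select by.
const simpleTools: SimpleTool[] = [
  { name: "sum", description: "Adds two numbers", call: ({ a, b }) => a + b },
  { name: "multiply", description: "Multiplies two numbers", call: ({ a, b }) => a * b },
];

// In a real agent the LLM picks the tool name and arguments;
// here the caller supplies them directly.
function dispatchTool(toolName: string, args: ToolArgs): number {
  const tool = simpleTools.find((t) => t.name === toolName);
  if (!tool) {
    throw new Error(`Unknown tool: ${toolName}`);
  }
  return tool.call(args);
}
```

Calling `dispatchTool("sum", { a: 2, b: 3 })` returns 5, and an unregistered name throws an error instead of failing silently.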

Step 4 – Loading and Querying Data

For more complex applications, you’ll likely want agents to interact with your data. LlamaIndex allows you to load and query documents directly using a vector index.

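    import { SimpleDirectoryReader, VectorStoreIndex } from "llamaindex";

    // Read the files in the directory into Document objects.
    const documents = await new SimpleDirectoryReader().loadData({
      directoryPath: "path_to_your_data",
    });

    // Embed the documents and build a vector index over them.
    const vectorIndex = await VectorStoreIndex.fromDocuments(documents);

    // The query engine retrieves relevant content and asks the LLM to answer.
    const queryEngine = vectorIndex.asQueryEngine();

This allows your agent to access and answer questions based on the data it has indexed.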
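For intuition about what a vector index does when it is queried, the sketch below ranks documents by cosine similarity to the query. It is a toy under stated assumptions: `embed` is a hypothetical word-count stand-in for a real embedding model, and `retrieveTopDocument` is an illustrative helper, not a LlamaIndex function.

```typescript
// Toy stand-in for an embedding model: counts how often each vocabulary
// term appears in the text. Real indexes use learned embeddings.
function embed(text: string, vocabulary: string[]): number[] {
  const words = text.toLowerCase().split(/\W+/).filter((w) => w.length > 0);
  return vocabulary.map((term) => words.filter((w) => w === term).length);
}

// Cosine similarity between two vectors of equal length; 0 if either is all zeros.
function cosineSimilarity(x: number[], y: number[]): number {
  const dot = x.reduce((acc, xi, i) => acc + xi * (y[i] ?? 0), 0);
  const normX = Math.sqrt(x.reduce((acc, xi) => acc + xi * xi, 0));
  const normY = Math.sqrt(y.reduce((acc, yi) => acc + yi * yi, 0));
  if (normX === 0 || normY === 0) return 0;
  return dot / (normX * normY);
}

// Returns the document most similar to the query, or undefined if there are none.
function retrieveTopDocument(
  query: string,
  docs: string[],
  vocabulary: string[],
): string | undefined {
  const queryVector = embed(query, vocabulary);
  let best: string | undefined;
  let bestScore = -Infinity;
  for (const doc of docs) {
    const score = cosineSimilarity(queryVector, embed(doc, vocabulary));
    if (score > bestScore) {
      bestScore = score;
      best = doc;
    }
  }
  return best;
}
```

A production index replaces the word counts with model-generated embeddings and usually returns several top matches rather than one, but the ranking step follows the same idea.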

Orchestrating Multi-Agent Workflows

In more complex systems, multiple agents collaborate on a task. This is where orchestration comes into play. The orchestration agent decides which agent should handle the next step in the workflow.

For instance, if one agent checks an account balance, another agent can handle a money transfer:

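    // Sketch based on the early LlamaIndex.TS workflow API. Import paths and the
    // addStep signature differ between versions, so check your installed release.
    import { StartEvent, StopEvent, Workflow, WorkflowEvent } from "llamaindex";

    // Custom event that carries the balance from the first step to the second.
    class BalanceCheckedEvent extends WorkflowEvent<{ balance: number }> {}

    const bankingWorkflow = new Workflow();

    // Step 1: runs when the workflow starts and reports the balance.
    bankingWorkflow.addStep(StartEvent, async (_context, _ev) => {
      return new BalanceCheckedEvent({ balance: 1000 });
    });

    // Step 2: runs once the balance is known and ends the workflow.
    bankingWorkflow.addStep(BalanceCheckedEvent, async (_context, _ev) => {
      return new StopEvent({ result: "Transferred $500" });
    });

    const result = await bankingWorkflow.run("Transfer $500");

In this case, orchestration ensures that tasks are completed in the right order, even if multiple agents are required to finish them.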
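The ordering guarantee comes from events: each step consumes one kind of event and emits the next, and the workflow ends when a stop event appears. The following library-free sketch shows that mechanism under simplifying assumptions. `BankingEvent`, `checkBalanceStep`, `transferMoneyStep`, and `runBankingFlow` are hypothetical names, and the balance is hard-coded.

```typescript
// Hypothetical event shapes for illustration; not the LlamaIndex.TS workflow API.
type StartBanking = { type: "start"; amount: number };
type BalanceChecked = { type: "balanceChecked"; balance: number; amount: number };
type StopBanking = { type: "stop"; result: string };
type BankingEvent = StartBanking | BalanceChecked | StopBanking;

// Step 1: look up the balance (hard-coded here) and pass it on.
function checkBalanceStep(ev: StartBanking): BankingEvent {
  return { type: "balanceChecked", balance: 1000, amount: ev.amount };
}

// Step 2: transfer only if the balance covers the amount, then stop.
function transferMoneyStep(ev: BalanceChecked): BankingEvent {
  if (ev.amount > ev.balance) {
    return { type: "stop", result: "Insufficient funds" };
  }
  return { type: "stop", result: `Transferred $${ev.amount}` };
}

// The runner plays the orchestrator: it routes each event to the step
// that handles it until a stop event is produced.
function runBankingFlow(amount: number): string {
  let event = { type: "start", amount } as BankingEvent;
  while (event.type !== "stop") {
    event = event.type === "start" ? checkBalanceStep(event) : transferMoneyStep(event);
  }
  return event.result;
}
```

Because the transfer step can only run on a `balanceChecked` event, it cannot execute before the balance check, which is the property the orchestration layer is meant to provide.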

Scaling Your Workflow with Llama-Deploy

For production environments, you’ll want your workflows to be scalable and fault-tolerant. Enter llama-deploy, which allows you to turn your agents and workflows into microservices. This microservice architecture ensures high scalability, resilience, and flexibility.

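llama-deploy is a Python library, so the workflow you deploy must be defined in Python. A minimal setup looks like this:

    import asyncio
    from llama_deploy import deploy_core, deploy_workflow

    async def main():
        # Start the core services (control plane and message queue).
        await deploy_core()
        # BankingWorkflow is assumed to be a Python workflow; real calls also
        # take configuration arguments (see the llama-deploy documentation).
        await deploy_workflow(BankingWorkflow)

    asyncio.run(main())

Once deployed, your system can handle real-time operations and easily scale as your application grows.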
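As a rough mental model of the microservice layout, the sketch below keeps a message queue and a registry of named services in one in-memory class. This is an assumption-laden illustration, not llama-deploy's API: `InMemoryControlPlane`, `TaskMessage`, and `ServiceHandler` are hypothetical, and a real deployment runs these pieces as separate processes.

```typescript
// Hypothetical in-memory stand-ins for a control plane and message queue.
type TaskMessage = { service: string; input: string };
type ServiceHandler = (input: string) => string;

class InMemoryControlPlane {
  private services = new Map<string, ServiceHandler>();
  private queue: TaskMessage[] = [];

  // Registers a workflow or agent under a service name.
  register(name: string, handler: ServiceHandler): void {
    this.services.set(name, handler);
  }

  // Adds a task to the queue without running it yet.
  publish(message: TaskMessage): void {
    this.queue.push(message);
  }

  // Drains the queue in order, routing each message to its service.
  processAll(): string[] {
    const results: string[] = [];
    while (this.queue.length > 0) {
      const message = this.queue.shift();
      if (!message) break;
      const handler = this.services.get(message.service);
      results.push(handler ? handler(message.input) : `No service named ${message.service}`);
    }
    return results;
  }
}
```

Separating `publish` from `processAll` mirrors the way a queue decouples the caller from the service that eventually handles the task, which is what lets each service scale on its own.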

Conclusion: The Future of AI Workflows

By combining the multi-agent capabilities of LlamaIndex with the flexibility of TypeScript and microservice-based deployment, you can build highly efficient, scalable, and customizable AI applications. Whether it’s building customer support bots, financial services, or large-scale data processors, LlamaIndex provides a powerful toolset to bring your AI workflows to life.

Ready to start building? Give LlamaIndex a try and discover how easily you can create next-level AI-powered workflows that will revolutionize your business.